In Part 1 of the Twitter Files, we learned that Twitter was working with politicians, federal agencies, and Biden’s campaign team to censor information on Twitter, specifically information related to Hunter Biden’s laptop and the ensuing New York Post story.

In Part 2, we learned that teams of Twitter employees build blacklists, prevent disfavored tweets from trending, and actively limit the visibility of entire accounts or even trending topics—all in secret, without informing users. In other words, “blacklisting” and “shadowbanning” were real, despite congressional testimony by people like Jack Dorsey claiming otherwise.

In Part 3, we revealed how Twitter employees and directors escalated their censorship campaign in January 2021… and how they effectively threw out the rulebook in favor of their own partisan ideals.

In Part 4, we’ll learn how Twitter executives built the case for a permanent ban – something unprecedented for a world leader up to that point. HUGE thanks to Michael Shellenberger for the stellar reporting.

On Jan 7, senior Twitter execs:

– create justifications to ban Trump

– seek a change of policy for Trump alone, distinct from other political leaders

– express no concern for the free speech or democracy implications of a ban

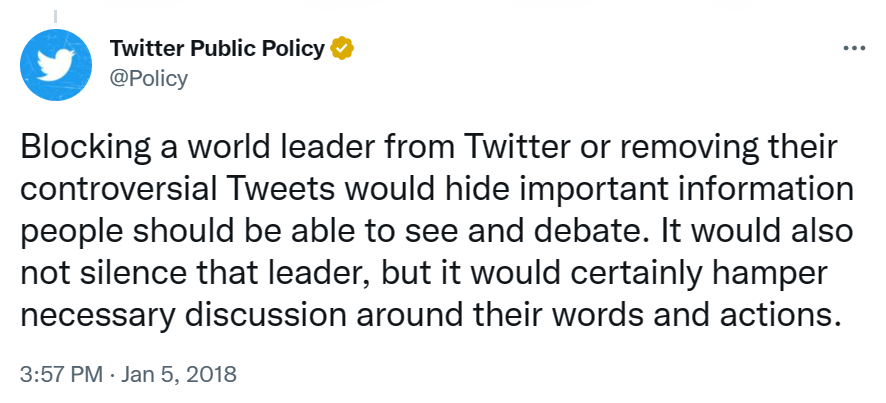

For years, Twitter had resisted calls to ban Trump.

“Blocking a world leader from Twitter,” it wrote in 2018, “would hide important info… [and] hamper necessary discussion around their words and actions.”

But after the events of Jan 6, the internal and external pressure on Twitter CEO @jack grows. Former First Lady @michelleobama, tech journalist @karaswisher, the @ADL, high-tech VC @ChrisSacca, and many others publicly call on Twitter to permanently ban Trump.

Dorsey was on vacation in French Polynesia the week of January 4-8, 2021. He phoned into meetings but also delegated much of the handling of the situation to senior execs @yoyoel, Twitter’s Global Head of Trust and Safety, and @vijaya, Head of Legal, Policy, & Trust.

As context, it’s important to understand that Twitter’s staff & senior execs were overwhelmingly progressive. In 2018, 2020, and 2022, 96%, 98%, & 99% of Twitter staff’s political donations went to Democrats (as detailed in Part 1).

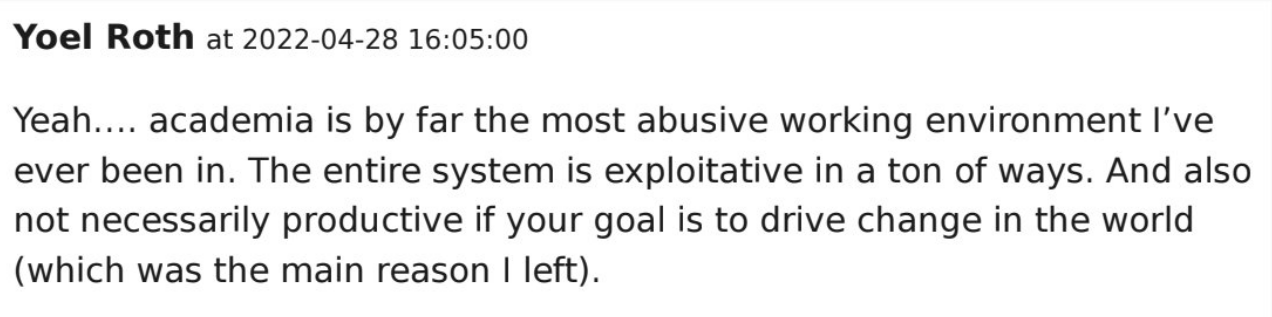

In 2017, Roth (@yoyoel) tweeted that there were “ACTUAL NAZIS IN THE WHITE HOUSE.” In April 2022, Roth told a colleague that his goal “is to drive change in the world,” which is why he decided not to become an academic.

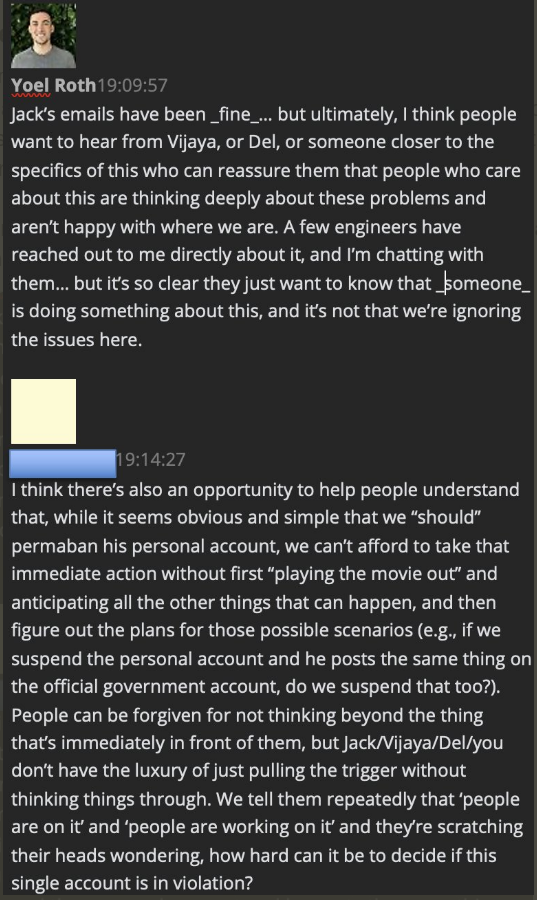

On January 7, Jack emails employees saying Twitter needs to remain consistent in its policies, including the right of users to return to Twitter after a temporary suspension.

Afterward, Roth reassures an employee that “people who care about this… aren’t happy with where we are.”

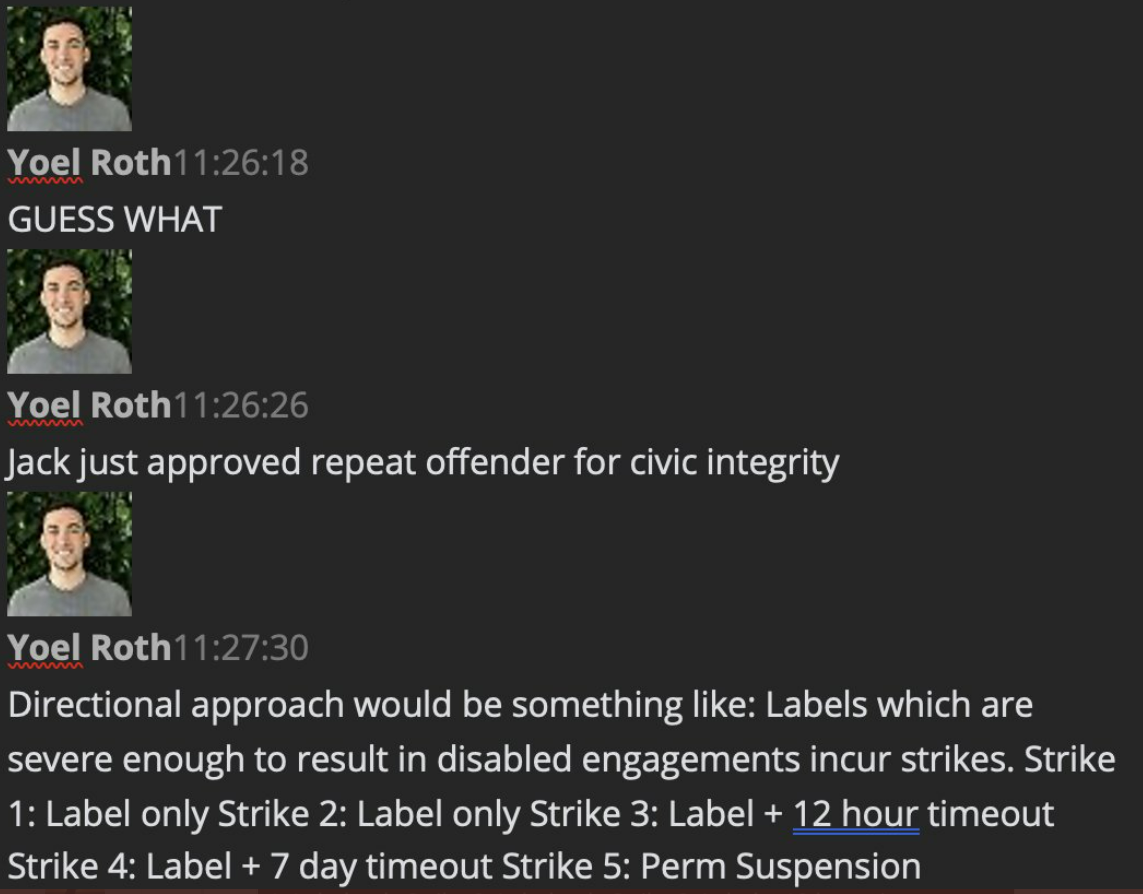

Around 11:30 am PT, Roth DMs his colleagues with news that he is excited to share.

“GUESS WHAT,” he writes. “Jack just approved repeat offender for civic integrity.”

The new approach would create a system where five violations (“strikes”) would result in permanent suspension.

“Progress!” exclaims a member of Roth’s Trust and Safety Team.

The exchange between Roth and his colleagues makes clear that they had been pushing Jack for greater restrictions on the speech Twitter allows around elections.

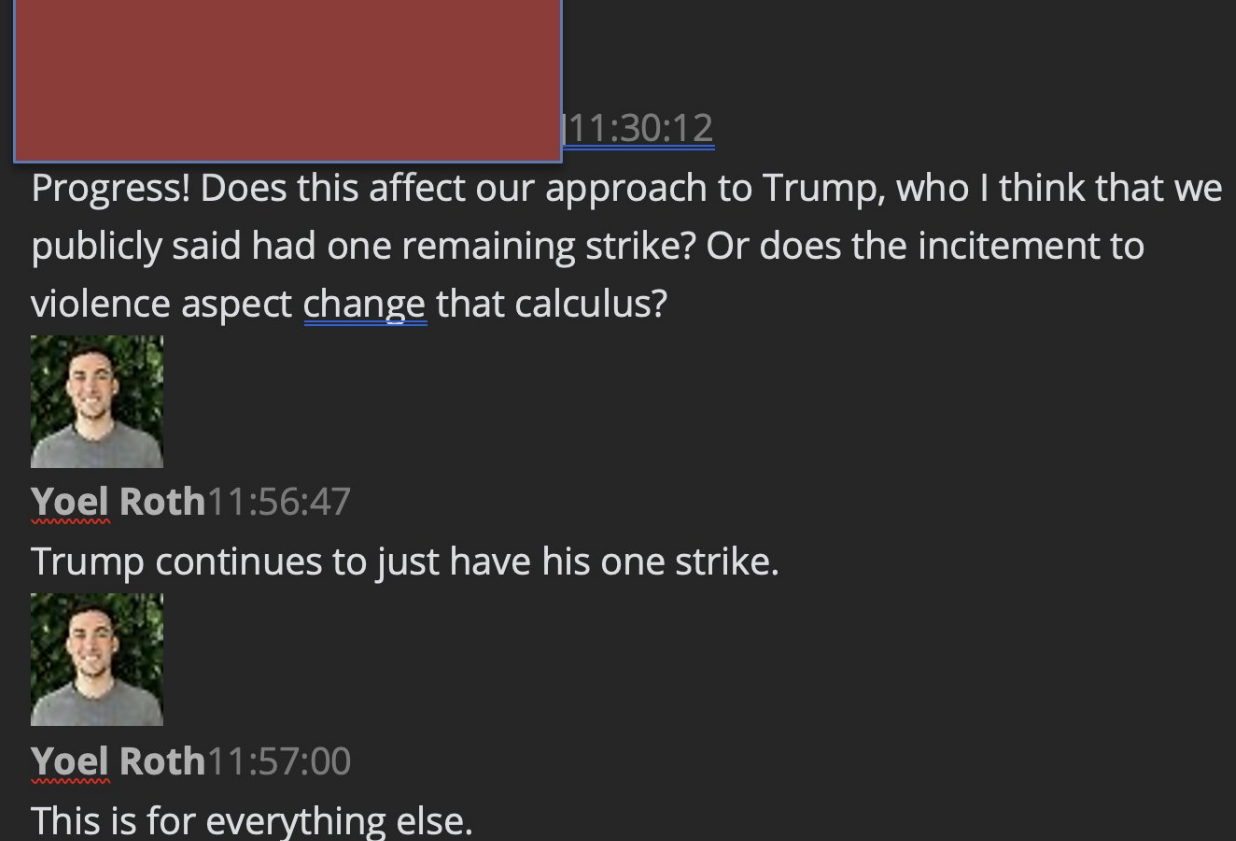

The colleague wants to know if the decision means Trump can finally be banned. The person asks, “does the incitement to violence aspect change that calculus?”

Roth says it doesn’t. “Trump continues to just have his one strike” (remaining).

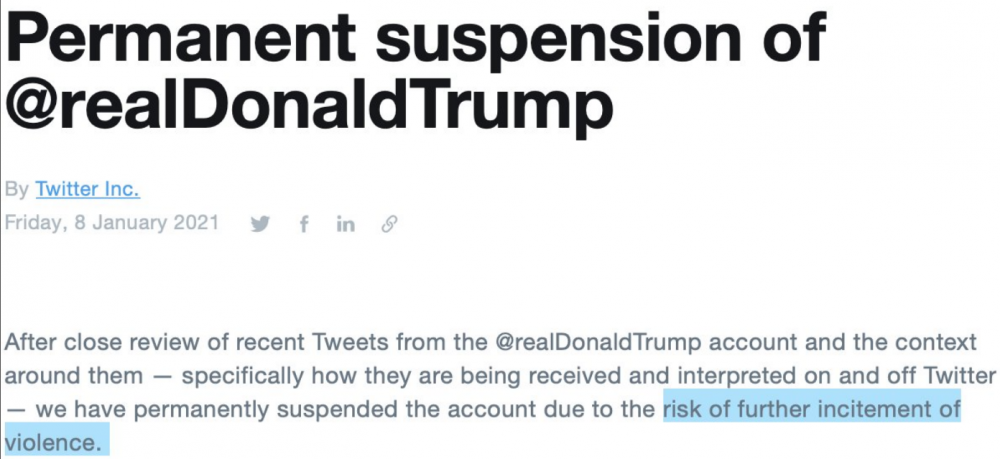

Roth’s colleague’s query about “incitement to violence” heavily foreshadows what will happen the following day. On January 8, Twitter announces a permanent ban on Trump due to the “risk of further incitement of violence.”

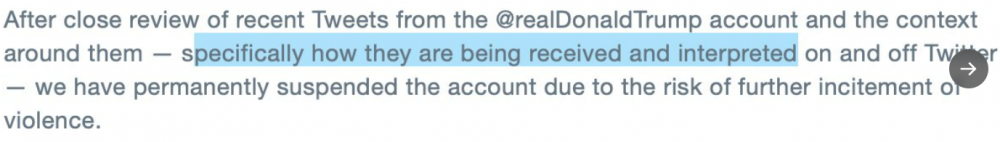

On January 8th, Twitter says its ban is based on “specifically how [Trump’s tweets] are being received & interpreted.”

But in 2019, Twitter said it did “not attempt to determine all potential interpretations of the content or its intent.”

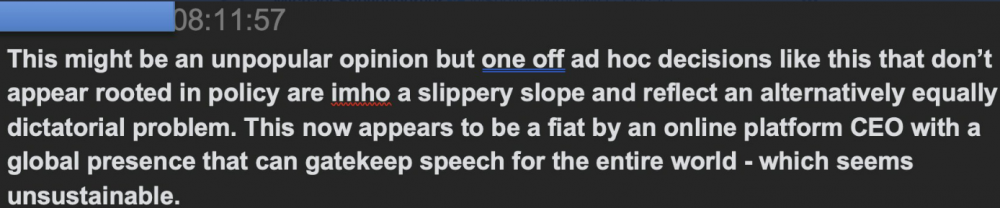

The *only* serious concern we found expressed within Twitter about the free speech and democracy implications of banning Trump came from a junior employee. It was tucked away in a lower-level Slack channel known as “site-integrity-auto.”

“This might be an unpopular opinion but one off ad hoc decisions like this that don’t appear rooted in policy are imho a slippery slope… This now appears to be a fiat by an online platform CEO with a global presence that can gatekeep speech for the entire world…”

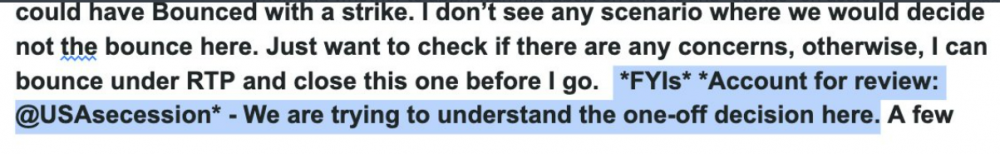

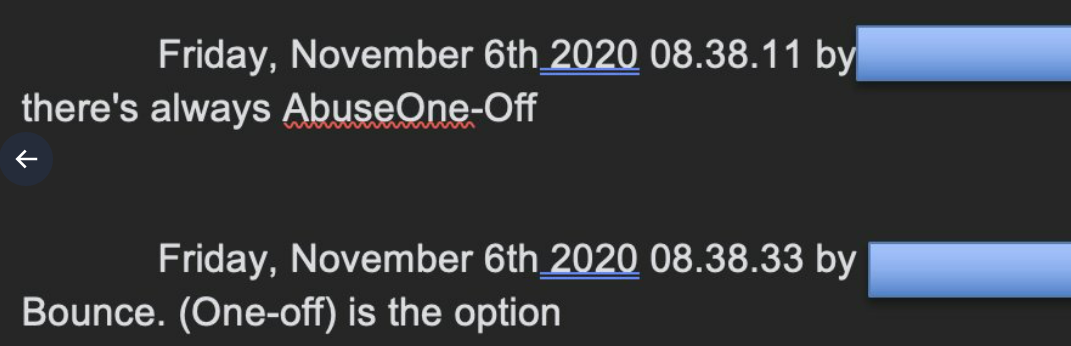

Twitter employees frequently use the term “one off” in their Slack discussions, which reveals significant employee discretion over when and whether to apply warning labels to tweets and “strikes” to users. Here are typical examples.

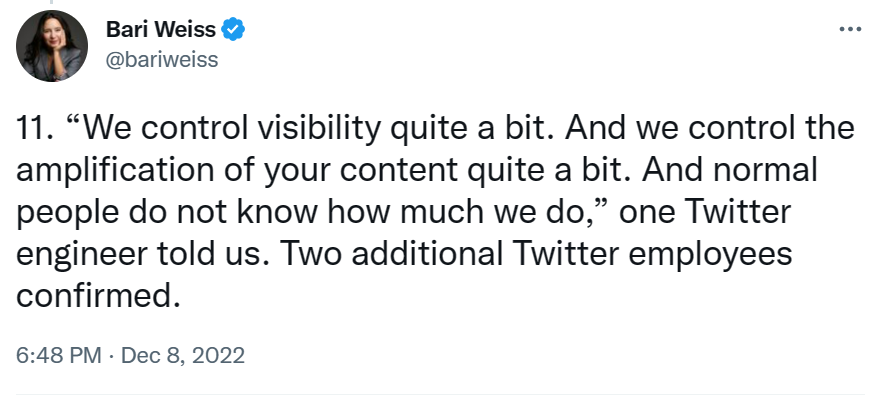

You may recall from Part 2 that, according to Twitter staff, “We control visibility quite a bit. And we control the amplification of your content quite a bit. And normal people do not know how much we do.”

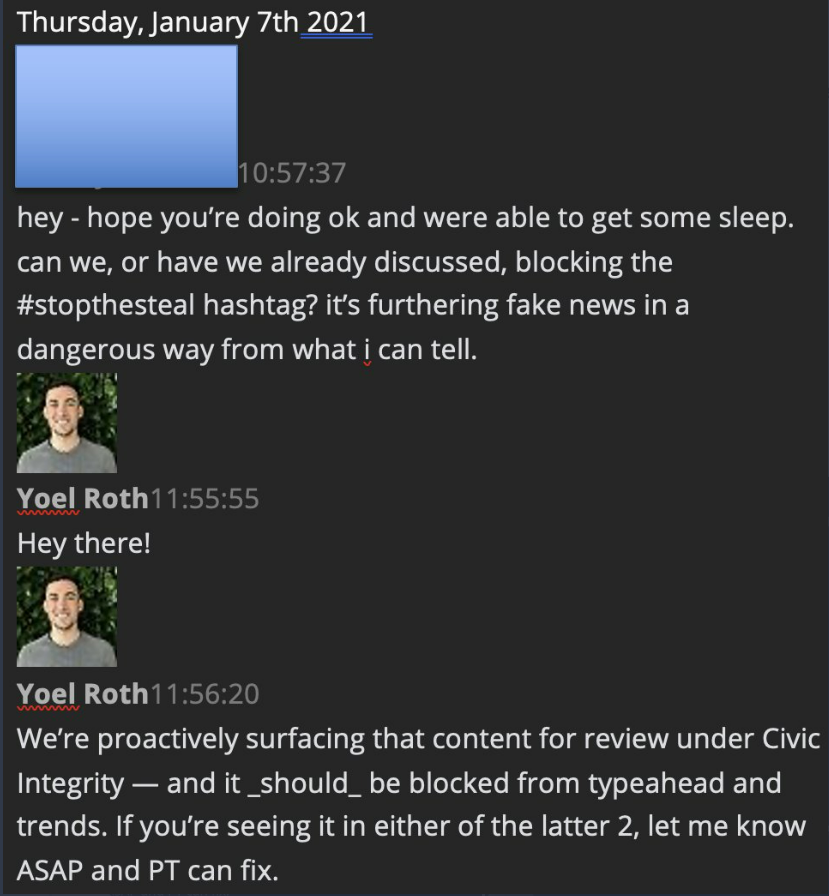

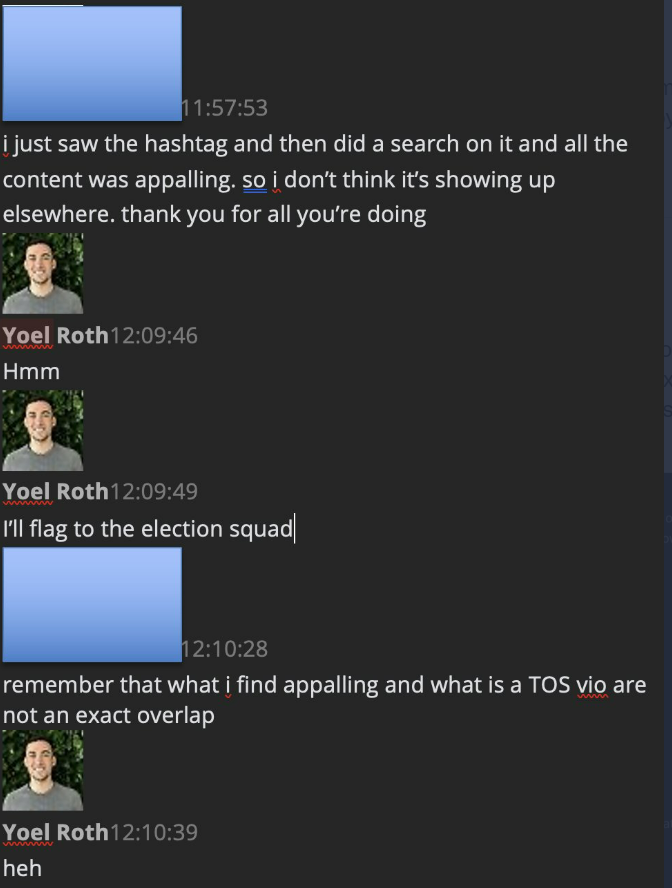

Twitter employees recognize the difference between their own politics & Twitter’s Terms of Service (TOS), but they also engage in complex interpretations of content in order to stamp out prohibited tweets, as a series of exchanges over the “#stopthesteal” hashtag reveals.

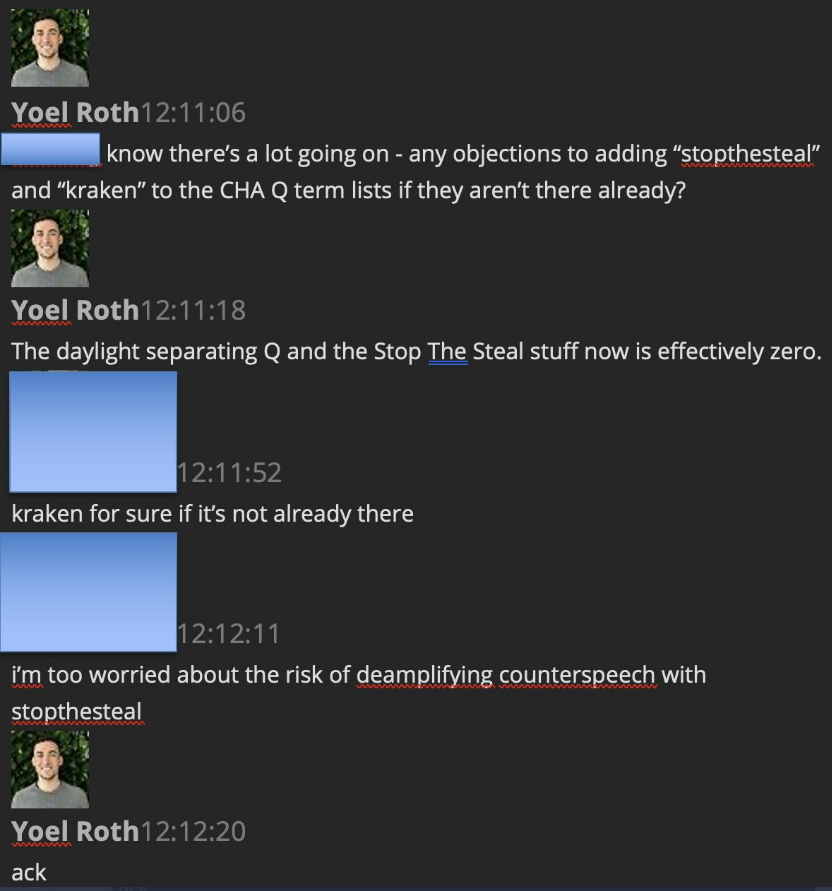

Roth immediately DMs a colleague to ask that they add “stopthesteal” & [QAnon conspiracy term] “kraken” to a blacklist of terms to be deamplified.

Roth’s colleague objects that blacklisting “stopthesteal” risks “deamplifying counterspeech” that validates the election.

Indeed, notes Roth’s colleague, “a quick search of top stop the steal tweets and they’re counterspeech”

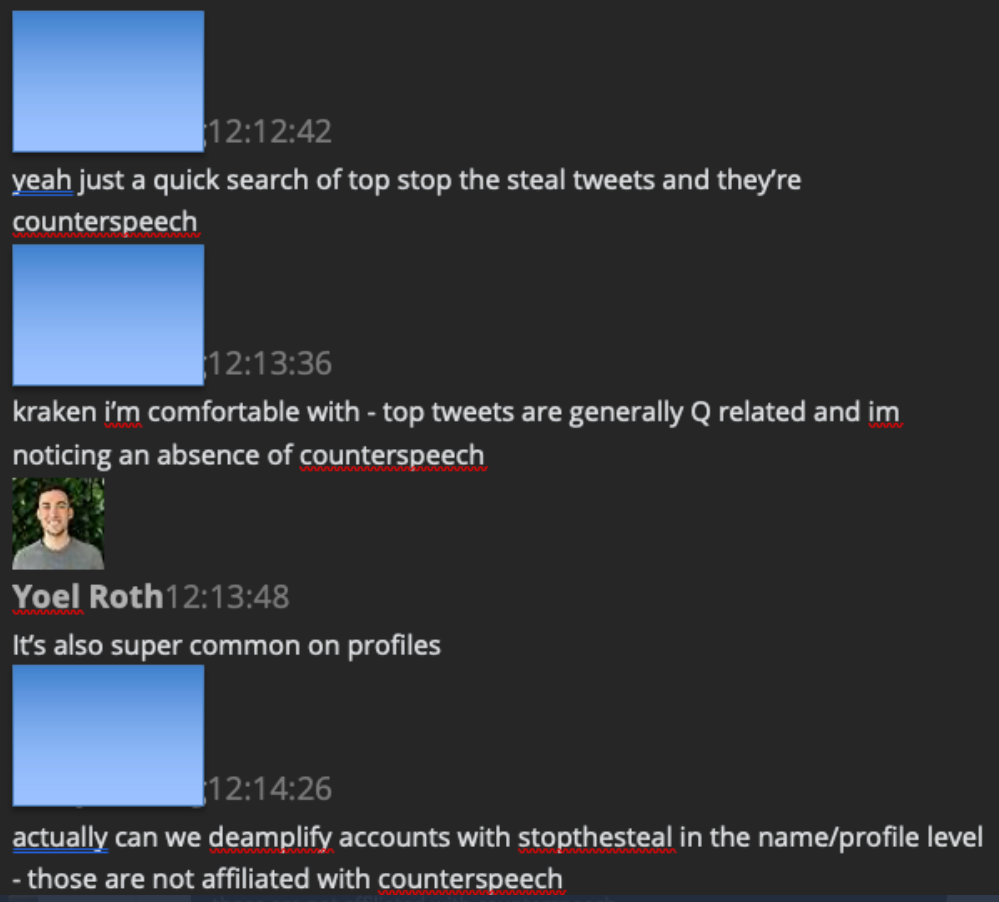

But they quickly come up with a solution: “deamplify accounts with stopthesteal in the name/profile” since “those are not affiliated with counterspeech”

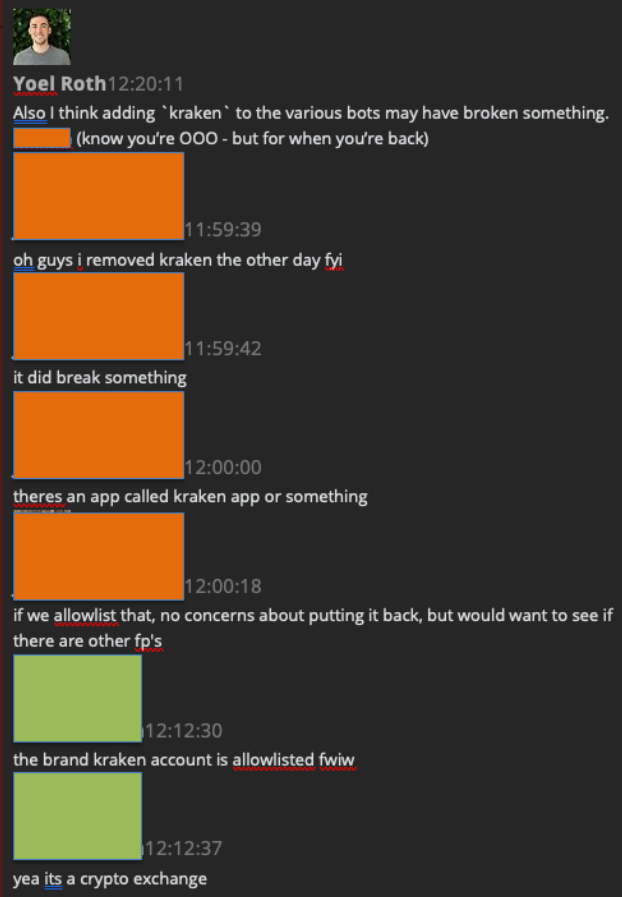

But it turns out that even blacklisting “kraken” is less straightforward than they thought. That’s because kraken, in addition to being a QAnon conspiracy theory based on the mythical Norwegian sea monster, is also the name of a cryptocurrency exchange, and was thus “allowlisted.”

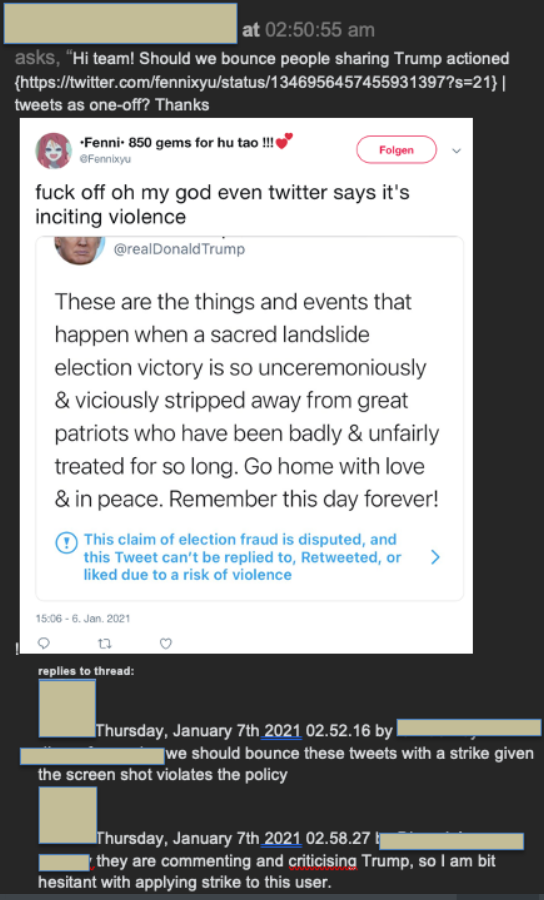

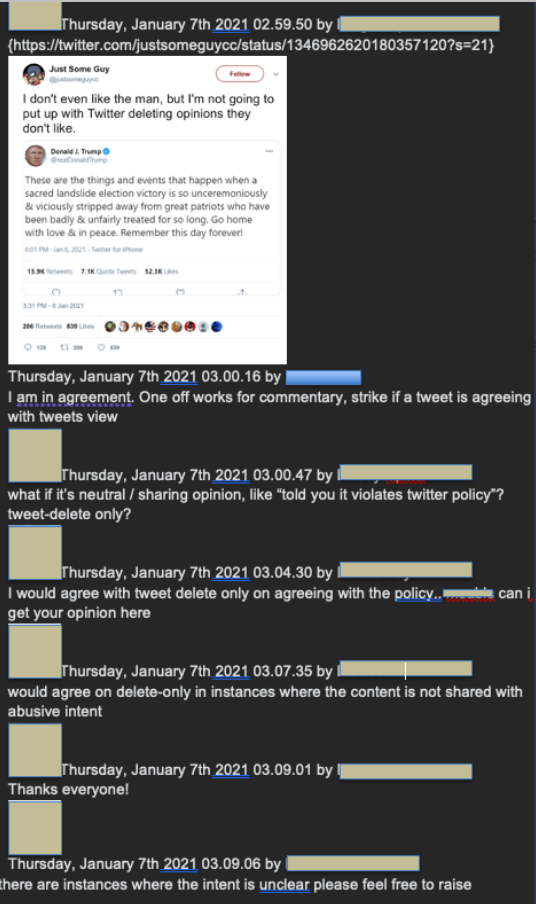

Employees struggle with whether to punish users who share screenshots of Trump’s deleted J6 tweets:

“we should bounce these tweets with a strike given the screen shot violates the policy”

“they are criticising Trump, so I am bit hesitant with applying strike to this user”

What if a user dislikes Trump and objects to Twitter’s censorship? The tweet still gets deleted. But since the *intention* is not to deny the election result, no punishing strike is applied.

“if there are instances where the intent is unclear please feel free to raise”

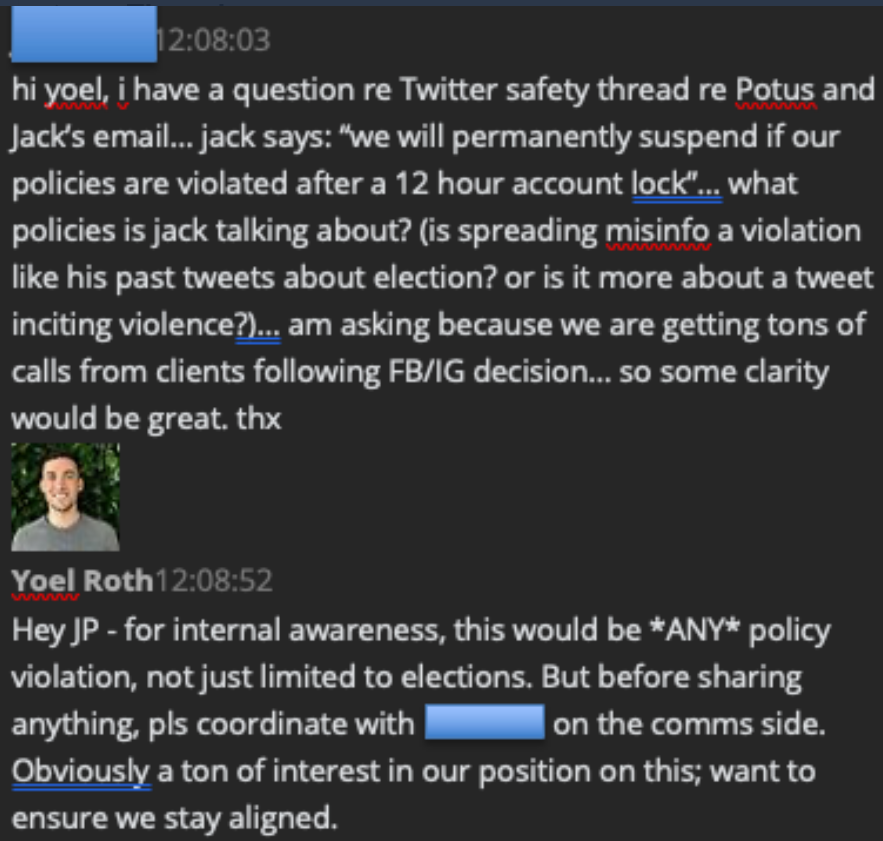

Around noon, a confused senior executive in advertising sales sends a DM to Roth.

Sales exec: “jack says: ‘we will permanently suspend [Trump] if our policies are violated after a 12 hour account lock’… what policies is jack talking about?”

Roth: “*ANY* policy violation”

What happens next is essential to understanding how Twitter justified banning Trump.

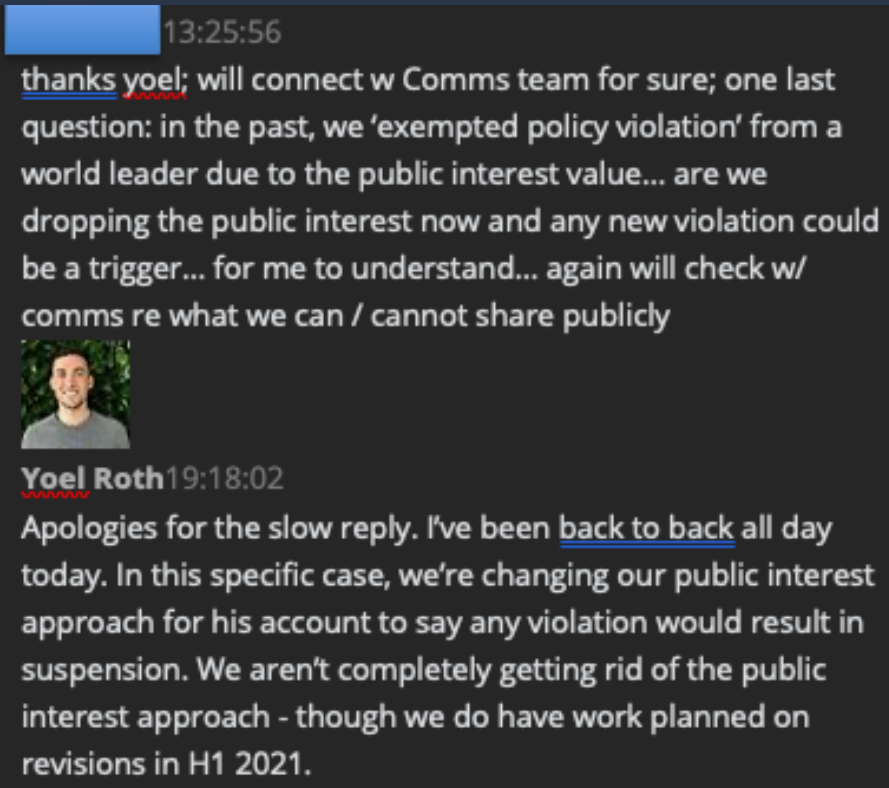

Sales exec: “are we dropping the public interest [policy] now…”

Roth, six hours later: “In this specific case, we’re changing our public interest approach for his account…”

The ad exec is referring to Twitter’s policy of “Public-interest exceptions,” which allows content from elected officials to stay up, even if it violates Twitter rules, “if it directly contributes to understanding or discussion of a matter of public concern.”

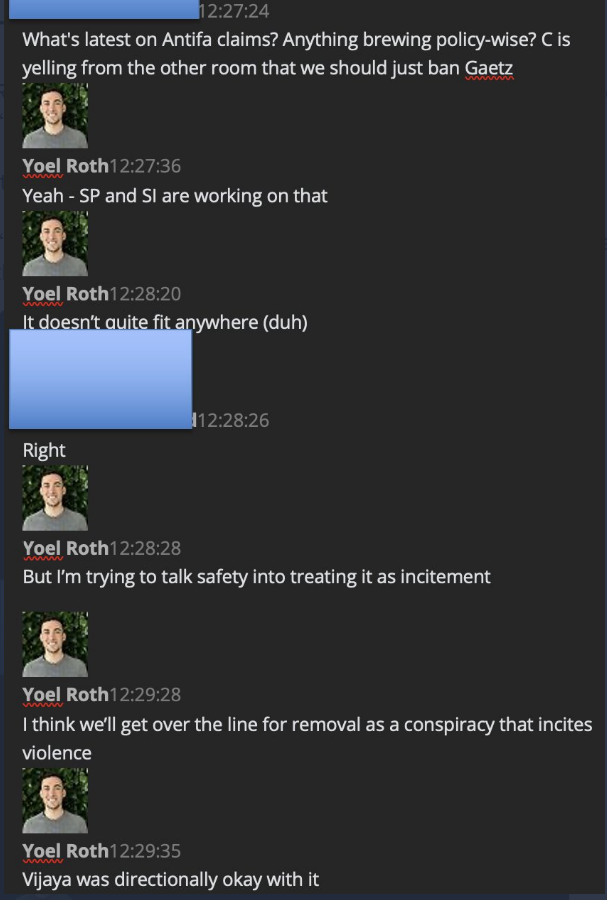

Roth pushes for a permanent suspension of Rep. Matt Gaetz even though it “doesn’t quite fit anywhere (duh).”

It’s a kind of test case for the rationale for banning Trump.

“I’m trying to talk [Twitter’s] safety [team] into… removal as a conspiracy that incites violence.”

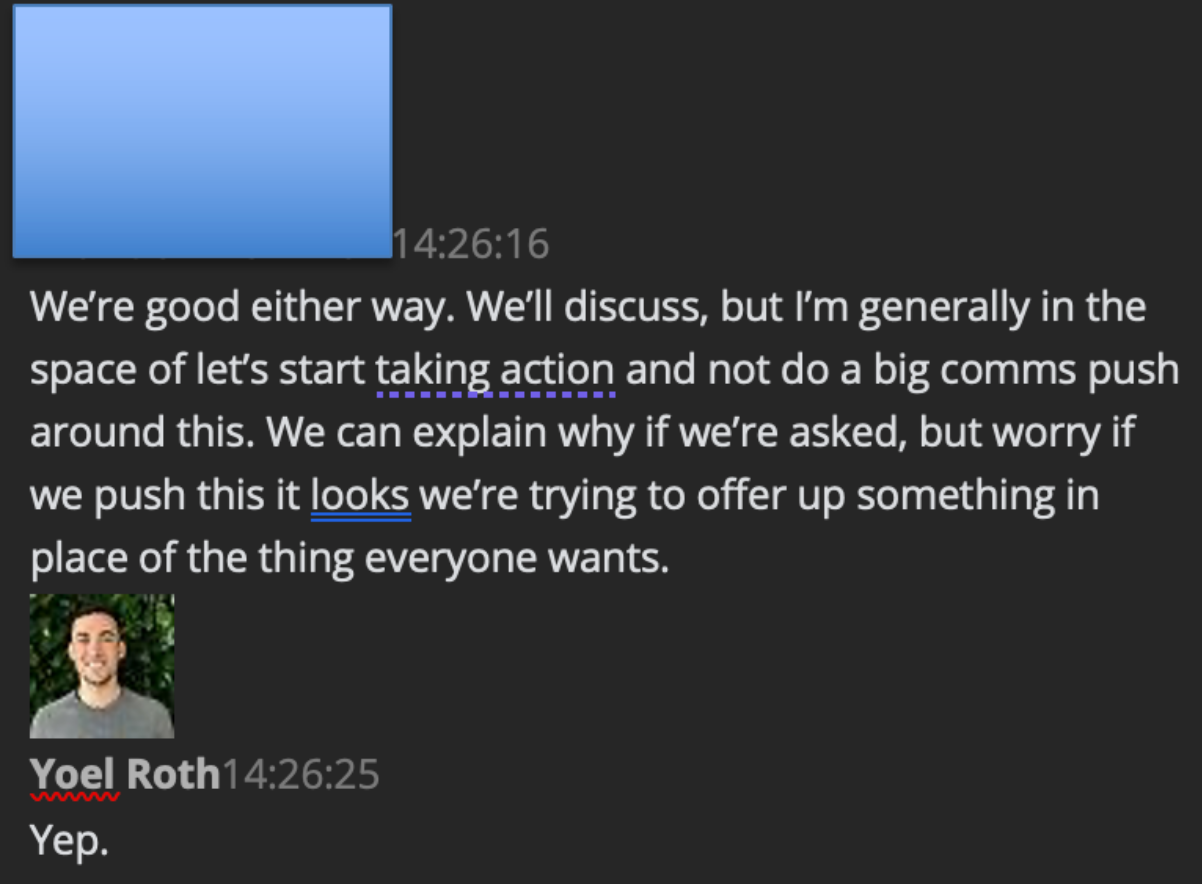

Around 2:30 pm, comms execs DM Roth to say they don’t want to make a big deal of the QAnon ban to the media because they fear “if we push this it looks we’re trying to offer up something in place of the thing everyone wants,” meaning a Trump ban.

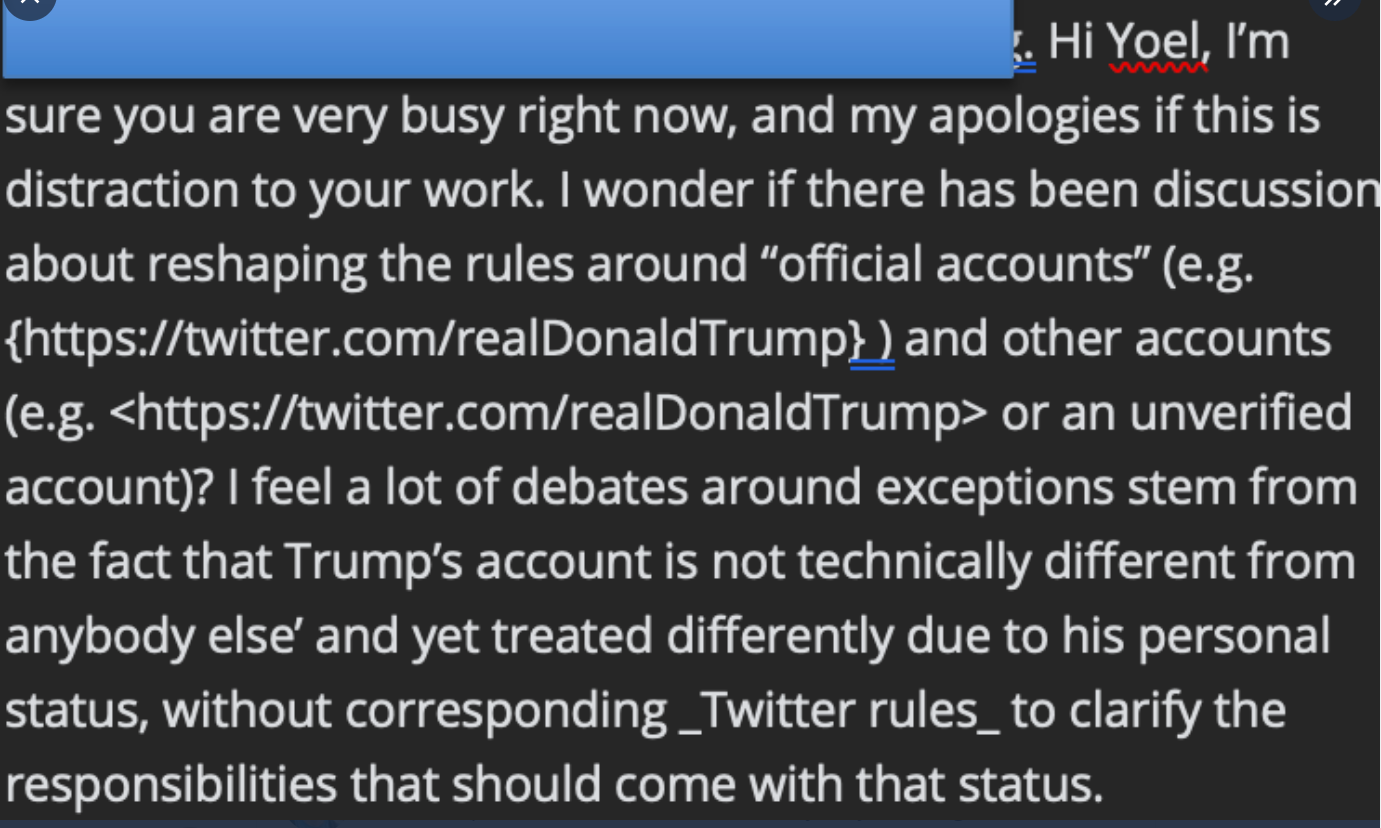

That evening, a Twitter engineer DMs Roth to say, “I feel a lot of debates around exceptions stem from the fact that Trump’s account is not technically different from anybody else’ and yet treated differently due to his personal status, without corresponding _Twitter rules_..”

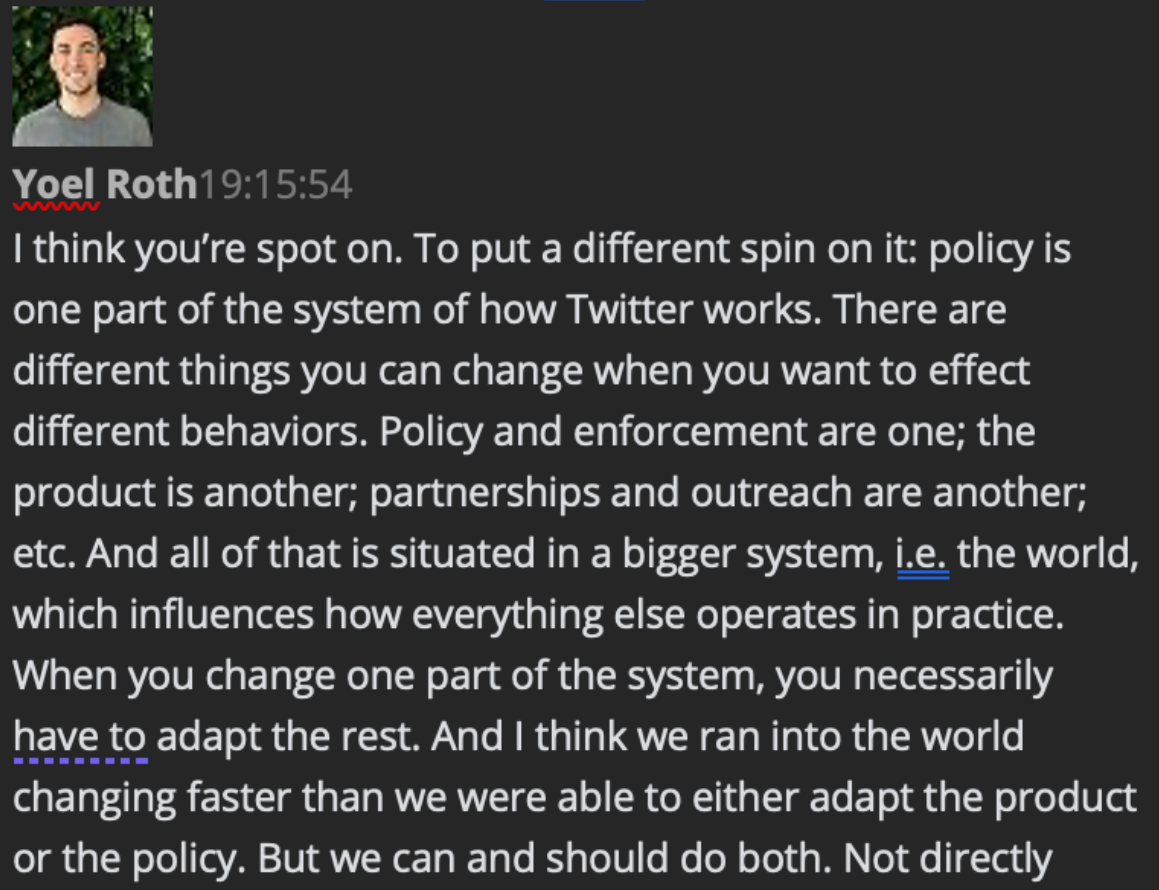

Roth’s response hints at how Twitter would justify deviating from its longstanding policy. “To put a different spin on it: policy is one part of the system of how Twitter works… we ran into the world changing faster than we were able to either adapt the product or the policy.”

On the evening of January 7, the same junior employee who expressed an “unpopular opinion” about “ad hoc decisions… that don’t appear rooted in policy” speaks up one last time before the end of the day.

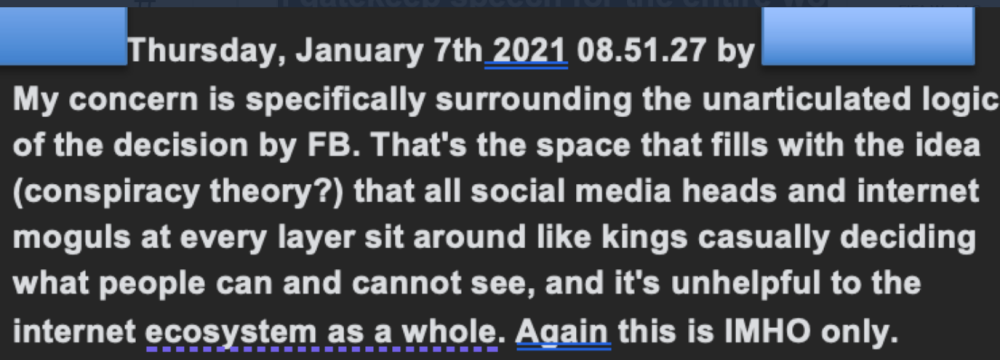

Earlier that day, the employee wrote, “My concern is specifically surrounding the unarticulated logic of the decision by FB. That space fills with the idea (conspiracy theory?) that all… internet moguls… sit around like kings casually deciding what people can and cannot see.”

The employee notes, later in the day, “And Will Oremus noticed the inconsistency too…,” linking to a OneZero article on Medium titled “Facebook Chucked Its Own Rulebook to Ban Trump.”

“The underlying problem,” writes Will Oremus, is that “the dominant platforms have always been loath to own up to their subjectivity, because it highlights the extraordinary, unfettered power they wield over the global public square and places the responsibility for that power on their own shoulders… So they hide behind an ever-changing rulebook, alternately pointing to it when it’s convenient and shoving it under the nearest rug when it isn’t.”

“Facebook’s suspension of Trump now puts Twitter in an awkward position. If Trump does indeed return to Twitter, the pressure on Twitter will ramp up to find a pretext on which to ban him as well.”

Anyone who has been paying attention knows that Trump did not incite violence on January 6th (or at any other time). In fact, he urged his supporters to respect law enforcement and remain peaceful.

But Twitter, from its executives to its employees, was hell-bent on removing a sitting president from the public square. And as you know… that’s exactly what happened.

Part 5 is coming soon. For the sake of democracy and free speech – don’t miss it.
