A new Twitter Files investigation by journalist Bari Weiss reveals that teams of Twitter employees built blacklists, prevented disfavored tweets from trending, and actively limited the visibility of entire accounts and even trending topics, all in secret and without informing users.

This second installment of the ongoing investigation was delayed by several days, and the reason may surprise you. Matt Taibbi broke the news on Tuesday:

On Tuesday, Twitter Deputy General Counsel (and former FBI General Counsel) Jim Baker was fired. Among the reasons? Vetting the first batch of “Twitter Files” – without knowledge of new management.

The process for producing the “Twitter Files” involved delivery to two journalists via a lawyer close to new management. However, after the initial batch, things became complicated. Over the weekend, while we both dealt with obstacles to new searches, it was @BariWeiss who discovered that the person in charge of releasing the files was someone named Jim. When she called to ask “Jim’s” last name, the answer came back: “Jim Baker.”

“My jaw hit the floor,” says Weiss.

The first batch of files both reporters received was marked, “Spectra Baker Emails.”

Baker is a controversial figure. He has been something of a Zelig of FBI controversies dating back to 2016, from the Steele Dossier to the Alfa-Server mess. He resigned in 2018 after an investigation into leaks to the press.

The news that Baker was reviewing the “Twitter files” surprised everyone involved, to say the least. New Twitter chief Elon Musk acted quickly to “exit” Baker Tuesday.

Reporters resumed searches through Twitter Files material – a lot of it – today. The next installment of “The Twitter Files” will appear @bariweiss.

Here’s that story:

Twitter once had a mission “to give everyone the power to create and share ideas and information instantly, without barriers.” Along the way, barriers nevertheless were erected.

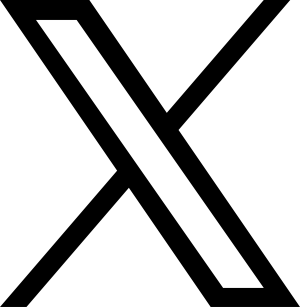

Take, for example, Stanford’s Dr. Jay Bhattacharya (@DrJBhattacharya) who argued that Covid lockdowns would harm children. Twitter secretly placed him on a “Trends Blacklist,” which prevented his tweets from trending.

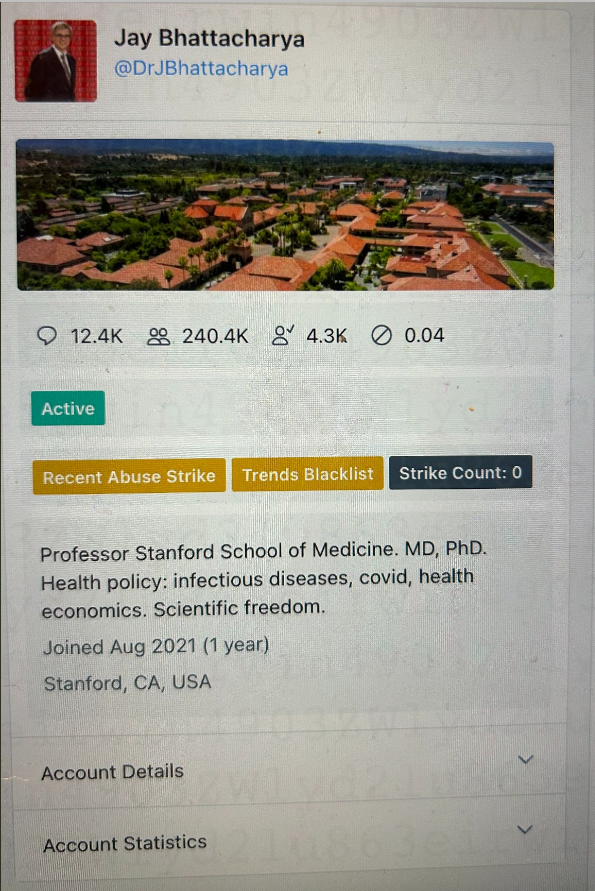

Or consider the popular right-wing talk show host Dan Bongino (@dbongino), who at one point was slapped with a “Search Blacklist.”

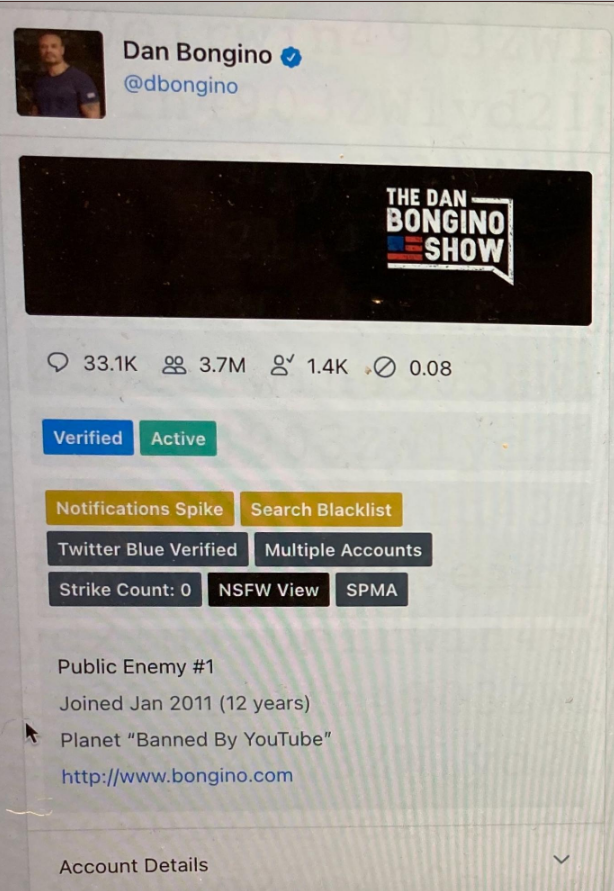

Twitter set the account of conservative activist Charlie Kirk (@charliekirk11) to “Do Not Amplify.”

Twitter has denied doing such things. In 2018, Twitter’s Vijaya Gadde (then Head of Legal, Policy, and Trust) and Kayvon Beykpour (Head of Product) said: “We do not shadow ban.” They added: “And we certainly don’t shadow ban based on political viewpoints or ideology.”

What many people call “shadow banning,” Twitter executives and employees call “Visibility Filtering” or “VF.” Multiple high-level sources confirmed its meaning.

“Think about visibility filtering as being a way for us to suppress what people see to different levels. It’s a very powerful tool,” one senior Twitter employee told us.

“VF” refers to Twitter’s control over user visibility. Twitter used VF to block searches of individual users, to limit the discoverability of a particular tweet, to keep select users’ posts from ever appearing on the “trending” page, and to exclude them from hashtag searches.

All without users’ knowledge.

“We control visibility quite a bit. And we control the amplification of your content quite a bit. And normal people do not know how much we do,” one Twitter engineer told us. Two additional Twitter employees confirmed this. The group that decided whether to limit the reach of certain users was the Strategic Response Team – Global Escalation Team, or SRT-GET. It often handled up to 200 “cases” a day.

But there existed a level beyond official ticketing, beyond the rank-and-file moderators following the company’s policy on paper. That is the “Site Integrity Policy, Policy Escalation Support,” known as “SIP-PES.” This secret group included the Head of Legal, Policy, and Trust (Vijaya Gadde), the Global Head of Trust & Safety (Yoel Roth), subsequent CEOs Jack Dorsey and Parag Agrawal, and others.

This is where the biggest, most politically sensitive decisions got made. “Think high follower account, controversial,” another Twitter employee told us. For these “there would be no ticket or anything.”

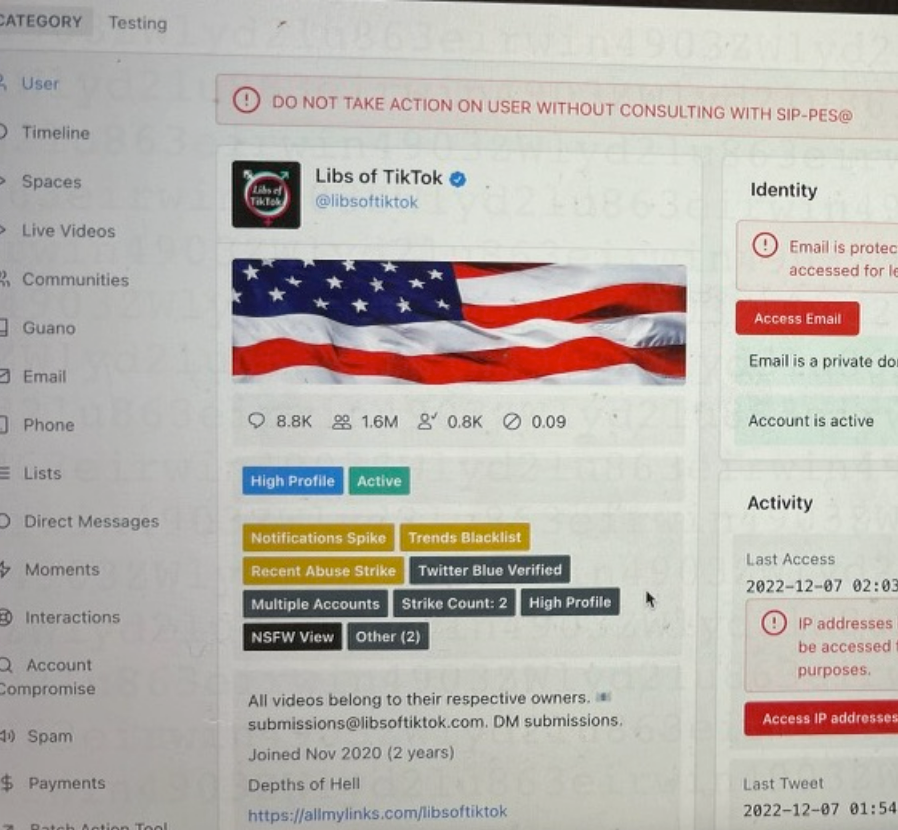

One of the accounts that rose to this level of scrutiny was @libsoftiktok — an account that was on the “Trends Blacklist” and was designated as “Do Not Take Action on User Without Consulting With SIP-PES.”

The account — which Chaya Raichik began in November 2020 and now boasts over 1.4 million followers — was subjected to six suspensions in 2022 alone, Raichik says. Each time, Raichik was blocked from posting for as long as a week. Twitter repeatedly informed Raichik that she had been suspended for violating Twitter’s policy against “hateful conduct.”

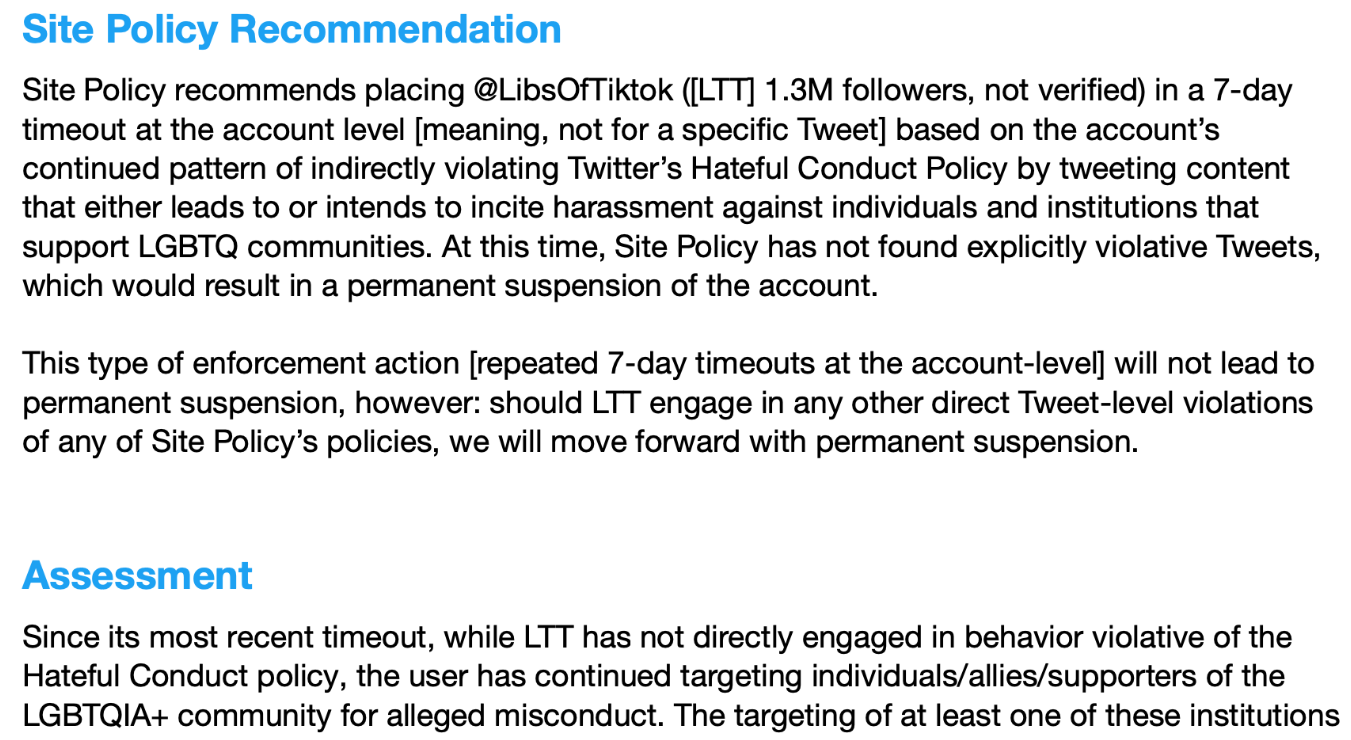

But in an internal SIP-PES memo from October 2022, after her seventh suspension, the committee acknowledged that “LTT has not directly engaged in behavior violative of the Hateful Conduct policy.”

The committee justified her suspensions internally by claiming her posts encouraged online harassment of “hospitals and medical providers” by insinuating “that gender-affirming healthcare is equivalent to child abuse or grooming.”

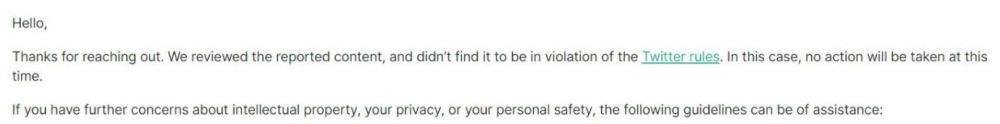

Compare this to what happened when Raichik herself was doxxed on November 21, 2022. A photo of her home with her address was posted in a tweet that has garnered more than 10,000 likes.

When Raichik told Twitter that her address had been disseminated, she says, Twitter Support responded with this message: “We reviewed the reported content, and didn’t find it to be in violation of the Twitter rules.” No action was taken. The doxxing tweet is still up.

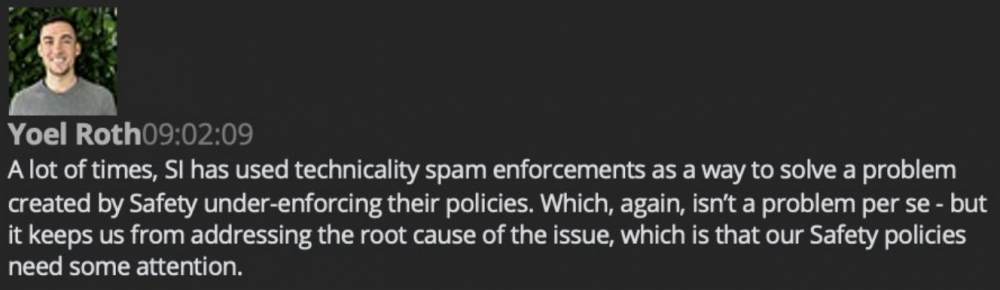

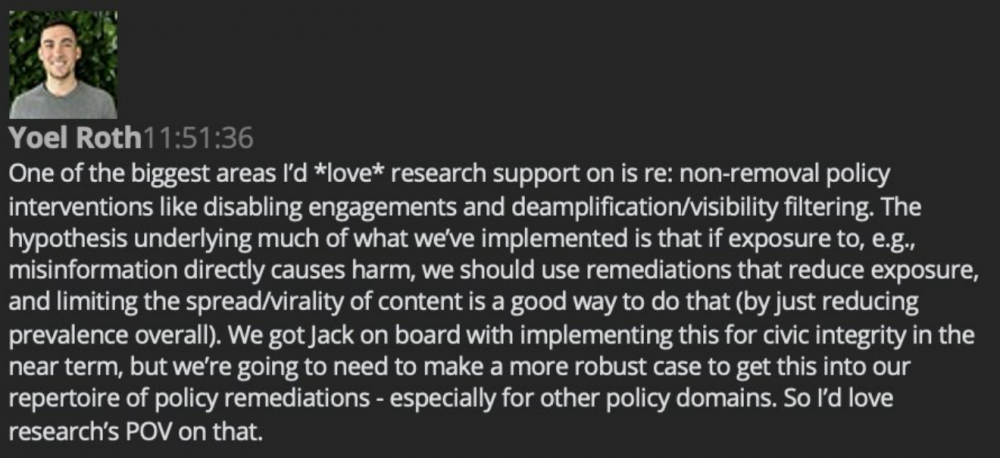

In internal Slack messages, Twitter employees spoke of using technicalities to restrict the visibility of tweets and subjects. Here’s Yoel Roth, Twitter’s then Global Head of Trust & Safety, in a direct message to a colleague in early 2021:

Six days later, in a direct message with an employee on the Health, Misinformation, Privacy, and Identity research team, Roth requested more research to support expanding “non-removal policy interventions like disabling engagements and deamplification/visibility filtering.”

Roth wrote: “The hypothesis underlying much of what we’ve implemented is that if exposure to, e.g., misinformation directly causes harm, we should use remediations that reduce exposure, and limiting the spread/virality of content is a good way to do that.”

He added: “We got Jack on board with implementing this for civic integrity in the near term, but we’re going to need to make a more robust case to get this into our repertoire of policy remediations – especially for other policy domains.”

More information is being released as researchers continue to comb through the mountain of internal communications provided by Musk. We’re also wrapping up a thorough review of the deposition of FBI Special Agent Elvis Chan, which was made public recently.

Stay tuned. This story is far from over…
